Artificial Intelligence

By understanding and leveraging AI, you can drive innovation, enhance efficiency, and create new value for your customers and stakeholders.

What is Artificial Intelligence?

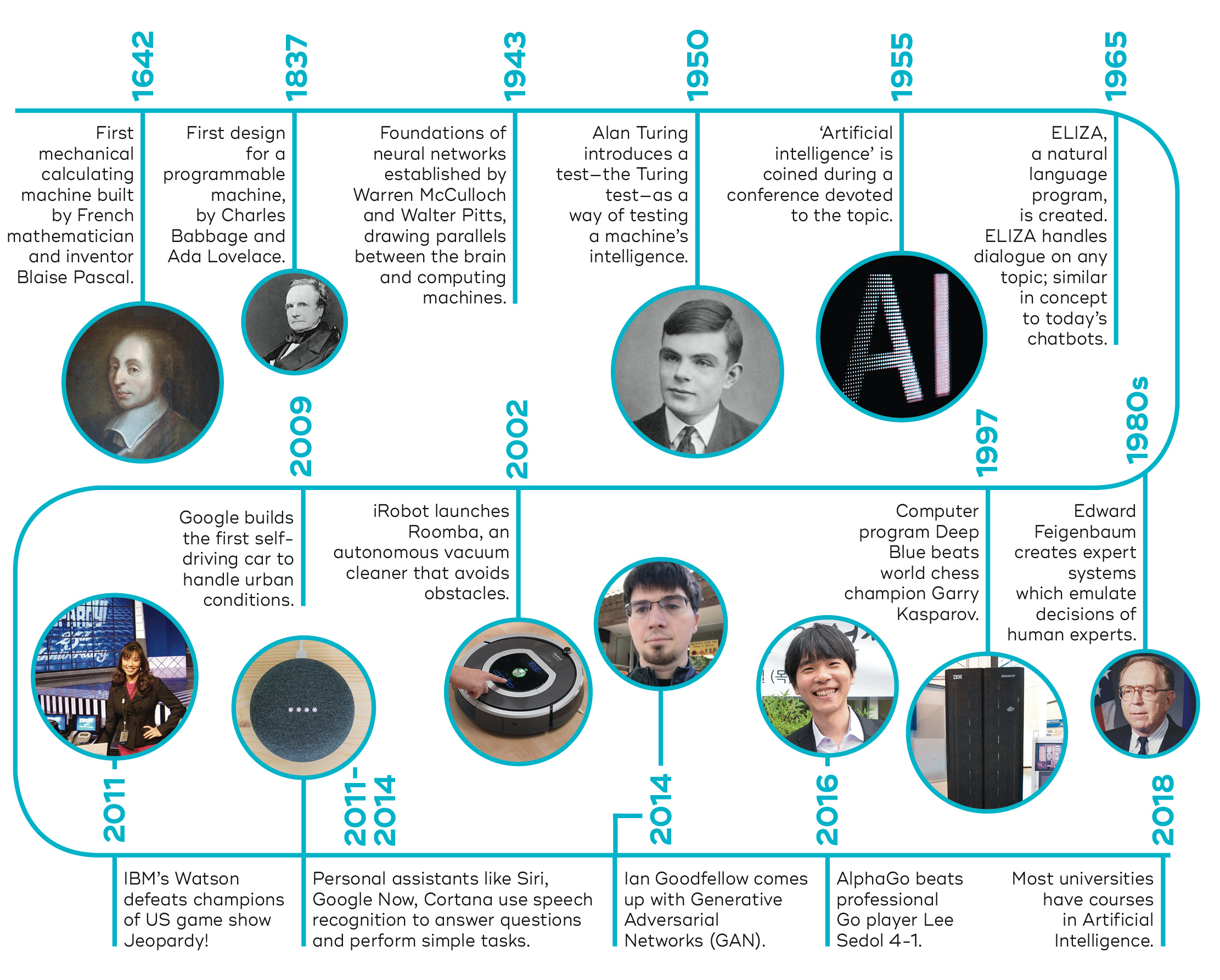

Artificial Intelligence refers to computer systems designed to perform tasks that typically require human intelligence. These tasks include visual perception, speech recognition, decision-making, and language translation. AI systems learn from data, identify patterns, and make decisions with minimal human intervention. The concept of AI dates back to the 1950s when computer scientists began exploring the possibility of creating machines that could "think." Early AI focused on rule-based systems and simple problem-solving algorithms.

Types of AI

Narrow AI

Also known as Weak AI, this type is designed for a specific task. Examples include virtual assistants like Siri or Alexa, and recommendation systems used by Netflix or Amazon. Narrow AI simulates human cognition for specific purposes but lacks true understanding or consciousness. It's highly effective for automating time-consuming tasks and analyzing data in ways humans sometimes can't. It's designed to perform specific tasks within a limited scope:

- Focuses on a single task or a narrow range of tasks

- Excels in its specific domain but lacks generalization

- Examples include voice assistants, recommendation systems, and spam filters

- Currently the most successful and widely implemented form of AI

General AI

This refers to AI systems with human-like cognitive abilities across a wide range of tasks. While still theoretical, General AI is the subject of intense research and development. General AI, or Strong AI, is a theoretical form of AI that would match human intelligence:

- Capable of performing any intellectual task that a human can

- Possesses the ability to reason, solve problems, and think abstractly

- Can learn and apply knowledge across different domains

- Currently does not exist and is still in the realm of theoretical research

Superintelligent AI

Superintelligent AI, also known as Super AI or Artificial Superintelligence (ASI), is a hypothetical future AI that surpasses human intelligence:

- Would possess intellectual powers beyond those of humans in all domains

- Capable of outperforming humans in cognition, problem-solving, and creativity

- Theoretically able to improve its own intelligence (recursive self-improvement)

- Raises significant ethical and existential questions for humanity

Machine Learning

Deep Learning

Large language models (LLMs)

LLMs are text-oriented generative AI models that have been in mainstream headlines since OpenAI’s ChatGPT launched in November 2022. They are trained on large volumes of text, typically billions of words, that are simulated or taken from public or private data collections.

Benefits for Organizations

Artificial Intelligence (AI) offers numerous capabilities that organizations can leverage to enhance their operations and drive growth. The main capabilities of AI in organizations include:

- Machine Learning: AI algorithms analyze data, identify patterns, and make predictions to support decision-making and process optimization.

- Natural Language Processing (NLP): This capability enables businesses to understand and interpret human language, facilitating tasks such as sentiment analysis and automated report generation.

- Personalization: AI analyzes customer preferences and behaviors to create tailored experiences in marketing, product recommendations, and customer service.

- Process Automation: AI can automate repetitive tasks across various departments, including data entry, scheduling, and inventory management, increasing operational efficiency.

- Predictive Analytics: AI systems can forecast market trends, consumer needs, and potential risks, allowing businesses to make proactive decisions.

- Enhanced Customer Experience: AI-powered chatbots and virtual assistants provide instant responses to customer inquiries and offer personalized support.

- Advanced Marketing: AI-driven tools analyze consumer behavior to create highly targeted marketing campaigns and personalized content.

- Improved Security: Machine learning algorithms can detect unusual patterns and behaviors, enhancing cybersecurity and fraud detection capabilities.

- Research and Development: AI facilitates innovation by analyzing vast amounts of data and identifying novel solutions in various industries.

- Human Resources Management: AI assists in recruitment, talent management, and providing personalized experiences for employees.

- IT Operations: AI helps improve system performance, automate routine processes, and proactively identify and fix IT issues.

Check out our AI training

Everyday examples of Artificial Intelligence

Self-driving cars utilize AI to navigate roads and make real-time decisions. They employ computer vision, sensor fusion, and machine learning algorithms to detect obstacles, predict potential threats, and optimize routes. These vehicles can learn from driver behavior and adapt to various driving conditions, enhancing safety and efficiency on the roads.

Bots and digital assistants, such as Amazon's Alexa, Google Assistant, and Apple's Siri, use AI to understand and respond to natural language commands. They leverage natural language processing and machine learning to interpret user requests, access information, control smart home devices, and manage schedules. These assistants continuously learn from user interactions to improve their performance and personalization.

Recommendation engines employ AI algorithms to analyze user behavior, preferences, and historical data to suggest relevant content, products, or services. These systems are widely used by companies like Netflix, Amazon, and Spotify to enhance user engagement and drive sales. They utilize techniques such as collaborative filtering and content-based filtering to provide personalized recommendations.
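To make collaborative filtering concrete, here is a minimal sketch of the user-based variant: items a person has not yet rated are scored by the ratings of other users, weighted by how similar those users' tastes are. The user names, item names, ratings, and the choice of cosine similarity are all illustrative assumptions; production systems at the companies named above are far more elaborate.

```python
from math import sqrt

# Hypothetical ratings (user -> {item: rating on a 1-5 scale}); illustrative data only.
ratings = {
    "ana":   {"drama_a": 5, "comedy_b": 1, "thriller_c": 4},
    "ben":   {"drama_a": 4, "comedy_b": 2, "thriller_c": 5, "documentary_d": 5},
    "chloe": {"drama_a": 1, "comedy_b": 5, "romcom_e": 4},
}

def cosine_similarity(a, b):
    """Cosine similarity computed over the items both users have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = sqrt(sum(a[i] ** 2 for i in shared))
    norm_b = sqrt(sum(b[i] ** 2 for i in shared))
    return dot / (norm_a * norm_b)

def recommend(user, ratings, top_n=3):
    """Rank unseen items by the similarity-weighted average rating of other users."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        if sim <= 0:
            continue
        for item, rating in other_ratings.items():
            if item in ratings[user]:
                continue  # only suggest items the user has not rated yet
            scores[item] = scores.get(item, 0.0) + sim * rating
            weights[item] = weights.get(item, 0.0) + sim
    ranked = sorted(((scores[i] / weights[i], i) for i in scores), reverse=True)
    return [item for _, item in ranked[:top_n]]

print(recommend("ana", ratings))
```

In this toy data set, "ana" rates items much like "ben", so the item only "ben" has rated is ranked first. Content-based filtering would instead compare item attributes (genre, keywords) with the user's past choices.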

AI-powered spam filters have revolutionized email security by using machine learning algorithms to identify and filter out unwanted or malicious messages. These systems analyze email content, sender information, and user behavior patterns to detect spam with high accuracy. They continuously learn and adapt to new spam tactics, providing more effective protection against phishing attempts and other email-based threats.
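As an illustration of how a filter can learn from message content, the sketch below uses a Naive Bayes classifier, a classic text-classification technique chosen here as an assumption; the passage above does not say which algorithm any particular product uses. The training messages are invented, and real filters also weigh sender information and user behavior.

```python
import math
from collections import Counter

# Hypothetical labelled messages; "ham" means legitimate mail.
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free reward", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
]

def train(examples):
    """Count words per label and messages per label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocabulary

def classify(text, model):
    """Return the label with the highest log-probability (add-one smoothing)."""
    word_counts, label_counts, vocabulary = model
    total_messages = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total_messages)
        denominator = sum(word_counts[label].values()) + len(vocabulary)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(TRAINING)
print(classify("free prize inside", model))
print(classify("project meeting monday", model))
```

Retraining on newly reported messages is what lets such a filter adapt to new spam tactics over time.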

Smart home technology integrates AI to create more intelligent and responsive living spaces. AI is used in various aspects of home automation, including energy management, security systems, and entertainment. Smart thermostats learn user preferences to optimize energy consumption, while AI-enhanced security systems use facial recognition and anomaly detection to improve home safety. Voice-controlled assistants facilitate seamless interaction with smart home devices.

Health data analysis leverages AI to process vast amounts of medical information, identify patterns, and assist in diagnosis and treatment planning. Machine learning algorithms can analyze medical images, predict disease progression, and personalize treatment plans based on individual patient data. AI-powered health analysis tools help healthcare professionals make more informed decisions and improve patient outcomes.

Generative AI

Key technologies behind generative AI:

- Foundation models: Pre-trained on broad, unlabeled data sets

- Large language models: Specialized in language-based tasks

- Neural networks: Designed to mimic the human brain's functioning

Major providers:

- OpenAI: Creator of GPT models and DALL-E

- Google (Alphabet): Offering integrated AI solutions through its Gemini models

- Microsoft: Partnering with OpenAI to provide enterprise-level generative AI services

- Anthropic: Focusing on AI safety and explainability

- Amazon Web Services (AWS): Providing the Bedrock service for accessing various AI models

To successfully implement generative AI in your organization:

- Assess Your Needs: Identify areas where AI can add value to your business processes

- Start Small: Begin with pilot projects to demonstrate value and gain organizational buy-in

- Invest in Data Infrastructure: Ensure robust data collection and management systems

- Develop AI Literacy: Foster a culture of continuous learning about AI technologies

- Partner Strategically: Consider collaborations with AI providers or tech companies

- Address Ethical Concerns: Develop guidelines for responsible AI use and data privacy

AI challenges and risks

Artificial intelligence presents numerous challenges and risks as it continues to advance and integrate into various aspects of our lives. One of the primary concerns is the lack of transparency and explainability in AI systems. Many AI models, especially deep learning algorithms, operate as "black boxes," making it difficult to understand how they arrive at their conclusions. This opacity can lead to issues of trust and accountability, particularly in critical applications like healthcare and finance.

Data protection and privacy remain significant challenges in AI development and deployment. As AI systems process vast amounts of personal information, ensuring compliance with regulations like GDPR and CCPA becomes crucial. The potential for data breaches and misuse of personal information raises ethical concerns and requires robust security measures to protect individuals' privacy rights.

The issue of bias in AI algorithms is another pressing concern. AI systems can inadvertently learn and perpetuate biases present in their training data, leading to unfair or discriminatory outcomes. This can have serious implications in areas such as hiring practices, loan approvals, and criminal justice, potentially exacerbating existing societal inequalities.
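One simple way to surface the kind of bias described above is to compare how often a system produces a favorable outcome for different groups. The sketch below computes per-group selection rates and their ratio for a hypothetical loan-approval log; the group labels and decisions are invented, and a low ratio is only a signal to investigate further, not proof of discrimination.

```python
from collections import defaultdict

# Hypothetical decision log: (group label, was the application approved?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

def selection_rates(decisions):
    """Share of favorable outcomes for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest

rates = selection_rates(decisions)
print(rates)
print(round(disparity_ratio(rates), 2))
```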

The socioeconomic impact of AI, particularly in terms of job displacement, is a growing concern. As AI automates more tasks, there is a risk of widespread job losses across various industries. This could lead to increased economic inequality and social unrest if not properly managed through reskilling programs and policy interventions.

Lastly, the potential for AI to be used maliciously poses significant risks. The creation of deepfakes, sophisticated cyberattacks, and autonomous weapons systems raise serious ethical and security concerns. As AI capabilities continue to advance, ensuring responsible development and use of these technologies becomes increasingly important to mitigate potential harm to individuals and society as a whole.

Ethical considerations when using generative AI

A critical ethical consideration is the creation and distribution of harmful content. Generative AI can produce convincing fake images, videos, and text, which could be used for misinformation, fraud, or other malicious purposes. This capability raises questions about accountability and the need for robust safeguards to prevent the spread of harmful or misleading information. Additionally, there are concerns about bias and fairness, as AI models can perpetuate and amplify existing societal biases present in their training data.

Copyright and intellectual property issues also present significant ethical challenges. Generative AI models are trained on vast amounts of existing content, raising questions about the ownership and fair use of the generated outputs. This blurs the lines between original creation and AI-assisted production, potentially infringing on creators' rights and complicating the attribution of authorship. Moreover, the potential for job displacement due to AI automation and the need for transparency in AI decision-making processes are additional ethical considerations that must be addressed as generative AI continues to evolve and integrate into various aspects of society.

Building an AI-ready workforce

Organizations can equip their staff with the right skills to use and leverage AI effectively through several strategic approaches. Taken together, the suggestions below create a workforce that is well equipped to leverage AI technologies, driving innovation and maintaining a competitive edge in the rapidly evolving digital landscape:

- Implement comprehensive AI training programs: Develop tailored AI training courses that cover everything from AI fundamentals to advanced applications. These programs should cater to different roles and skill levels within the organization, ensuring that both technical and non-technical staff can benefit from AI education.

- Offer personalized learning paths: Utilize AI-powered platforms to create customized learning experiences for employees. These systems can analyze individual skills, interests, and company needs to recommend relevant courses and materials, adapting the difficulty level as employees progress.

- Foster a culture of continuous learning: Encourage ongoing skills development by providing access to a variety of AI resources, including online courses, workshops, and industry conferences. Promote internal knowledge sharing and create opportunities for employees to apply their new AI skills to real-world projects.

- Collaborate with AI experts and institutions: Partner with universities, AI research centers, and industry leaders to provide employees with access to cutting-edge knowledge and practical insights. This can include guest lectures, mentorship programs, or collaborative projects that expose staff to the latest AI trends and applications.

- Conduct regular skills assessments and gap analyses: Use AI-powered tools to identify skill gaps within the organization and track employee progress. This data can inform future training initiatives and help leaders make informed decisions about workforce development and AI integration strategies.

The AI Act

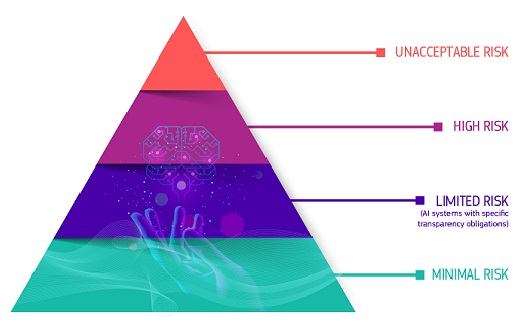

- Risk-based approach: AI systems are categorized based on their potential risks, with stricter regulations for high-risk applications.

- Prohibited practices: Certain AI applications are banned, such as social scoring and the untargeted scraping of facial images.

- Obligations for high-risk AI: Stringent requirements for safety, transparency, and accountability.

- Governance system: Establishment of a European Artificial Intelligence Board for oversight.

Risk hierarchy underpinning the EU AI Act (Dept of Trade, Enterprise & Employment)

Unacceptable risk

These are a set of eight harmful uses of AI that contravene EU values because they violate fundamental rights. These AI uses will be prohibited and include:

- Subliminal techniques likely to cause a person, or another, significant harm

- Exploiting vulnerabilities due to age, disability or social or economic situation

- Social scoring leading to disproportionate detrimental or unfavourable treatment

- Profiling individuals for prediction of criminal activity

- Untargeted scraping of facial images

- Inferring emotions in work or education

- Biometric categorisation of race, religion, sexual orientation

- Real-time remote biometric identification for law enforcement purposes

High risk

There are two high-risk categories of AI usage. The first category relates to the use of AI systems that are safety components of products, or which are themselves products, falling within the scope of certain Union harmonisation legislation listed in Annex I of the Act, for example toys and machinery. The provisions on product-linked high-risk AI systems apply from August 2027.

The second category relates to eight specific high-risk uses of AI listed in Annex III of the Act. These are AI systems that could adversely affect people's safety or their fundamental rights, as protected by the EU Charter of Fundamental Rights. The provisions on high-risk uses apply from August 2026.

Specific transparency risk

The AI Act introduces specific transparency requirements for certain AI applications where, for example, there is a clear risk of manipulation such as the use of chatbots or deepfakes.

Minimal risk

Most AI systems will only give rise to minimal risks and consequently, can be marketed and used subject to the existing legislation without additional legal obligations under the AI Act.
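For teams building an AI inventory, the four tiers above can be encoded as a first-pass triage step. The sketch below is a simplification under stated assumptions: the flag names (`prohibited_practice`, `annex_i_safety_component`, `annex_iii_use`, `chatbot`, `deepfake`) and the inventory entries are hypothetical, and the result is a starting point for review rather than a legal determination.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk: prohibited"
    HIGH = "high risk: stringent obligations"
    TRANSPARENCY = "specific transparency risk: disclosure requirements"
    MINIMAL = "minimal risk: no additional obligations under the AI Act"

def triage(use_case):
    """First-pass tier for one inventory entry, checked from most to least severe."""
    if use_case.get("prohibited_practice"):
        return RiskTier.UNACCEPTABLE
    if use_case.get("annex_i_safety_component") or use_case.get("annex_iii_use"):
        return RiskTier.HIGH
    if use_case.get("chatbot") or use_case.get("deepfake"):
        return RiskTier.TRANSPARENCY
    return RiskTier.MINIMAL

# Hypothetical inventory entries with hand-assigned flags.
inventory = {
    "customer support chatbot": {"chatbot": True},
    "toy with an AI safety component": {"annex_i_safety_component": True},
    "email spam filter": {},
}

for name, flags in inventory.items():
    print(f"{name}: {triage(flags).value}")
```

Checking the most severe tier first means an entry that matches several flags is always assigned the strictest applicable tier.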

AI Governance for Directors

AI governance is a critical framework that guides the responsible development and use of artificial intelligence within organizations. For directors, it's essential to grasp the full scope of AI applications in their business, enhance their own AI literacy, and develop robust oversight strategies. This includes assessing current and potential AI use cases, integrating AI into the organization's core values and vision, and carefully evaluating associated risks and ethical considerations.

Directors need to understand the strategic implications of AI for their organizations and develop a robust governance framework to harness its benefits responsibly. They should familiarize themselves with key AI concepts and issues, recognizing that while they don't need to be technical experts, they must have sufficient knowledge to make informed decisions and engage in meaningful discussions with advisors and stakeholders. Directors should also be aware of the unique characteristics of AI compared to traditional technology, including its potential for rapid evolution and far-reaching impacts across various aspects of business operations.

From a governance perspective, directors should focus on several key areas. These include establishing clear roles and responsibilities for AI decision-making within the organization, assessing and developing the company's AI capabilities and skills, and implementing appropriate governance structures. They should also oversee the development of AI-specific principles, policies, and strategies that align with the organization's values and long-term goals.

Additionally, directors need to ensure that proper practices, processes, and controls are in place to manage AI-related risks, including data privacy, security, and ethical considerations. Directors must also prioritize stakeholder engagement and impact assessment, considering how AI implementations may affect employees, customers, and other key stakeholders. They should advocate for the development of supporting infrastructure, such as maintaining an AI inventory and robust data governance framework. Finally, directors need to establish effective monitoring, reporting, and evaluation mechanisms to track AI performance, outcomes, and potential risks. By focusing on these areas, directors can help their organizations leverage AI for strategic advantage while mitigating associated risks and ensuring responsible use of the technology.

To ensure AI remains a key focus, boards should make it a regular agenda item, potentially creating dedicated AI subcommittees or expanding existing committee mandates. Continuous learning about AI trends, engaging with management on AI strategies, and fostering a culture of responsible innovation are crucial. Boards must also prioritize regular reviews of AI risk assessments, ethical implications, and performance metrics. By implementing these measures, boards can effectively oversee AI integration, manage risks, and leverage AI's potential to drive organizational success. This approach not only ensures compliance with evolving regulations but also positions the organization as a leader in ethical and effective AI utilization.

To ensure successful AI management, boards must first prioritize building their own AI literacy and competency. This involves gaining a foundational understanding of AI concepts, potential applications, and associated risks. Boards should invest in ongoing education and training to stay current with AI developments and their implications for the organization. Additionally, they should consider recruiting board members with AI expertise or engaging external advisors to provide specialized insights.

Boards need to establish a robust AI governance framework that aligns with the organization's overall strategy and values. This framework should include clear policies on ethical AI use, data privacy, security measures, and guidelines for AI procurement and development. Boards should also ensure that proper oversight mechanisms are in place, such as designating a specific committee or working group to focus on AI-related matters. Regular AI audits and risk assessments should be conducted to identify potential issues and address them proactively.

Finally, boards must foster a culture of responsible AI throughout the organization. This involves promoting ethical AI practices, emphasizing accountability and transparency, and ensuring that AI initiatives are aligned with long-term business objectives. Boards should work closely with management to develop comprehensive AI strategies, assess the organization's AI readiness, and allocate necessary resources for AI implementation. By taking these steps, boards can position their organizations to leverage AI's potential while effectively managing associated risks and challenges.

Check out this board AI readiness self-assessment:

| Readiness Criteria | Description |
| --- | --- |
| AI Strategy Alignment | Clearly defined role of AI in company strategy and alignment with long-term objectives |
| Board AI Literacy | Board members' understanding of AI concepts, applications, and implications |
| AI Governance Framework | Established policies and procedures for ethical AI use and risk management |
| AI Talent and Expertise | Availability of necessary AI skills within the organization or through external partnerships |
| Data Governance | Robust data management practices to support AI initiatives |
| AI Risk Assessment | Regular evaluation of AI-related risks and mitigation strategies |
| Ethical AI Practices | Guidelines and oversight for responsible and unbiased AI development and deployment |
| AI Infrastructure Readiness | Evaluation of IT systems and infrastructure to support AI implementation |
| Stakeholder Engagement | Consideration of AI's impact on employees, customers, and other stakeholders |
| Continuous Learning and Adaptation | Commitment to ongoing AI education and adjustment of strategies as technology evolves |
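A board could turn the criteria above into a simple scored self-assessment. The sketch below assumes a 1 to 5 scale per criterion and treats any score of 2 or lower as a gap; both the scale and the threshold are illustrative choices, not part of the assessment itself, and the example scores are invented.

```python
# The ten readiness criteria from the table above.
CRITERIA = [
    "AI Strategy Alignment",
    "Board AI Literacy",
    "AI Governance Framework",
    "AI Talent and Expertise",
    "Data Governance",
    "AI Risk Assessment",
    "Ethical AI Practices",
    "AI Infrastructure Readiness",
    "Stakeholder Engagement",
    "Continuous Learning and Adaptation",
]

GAP_THRESHOLD = 2  # assumed cut-off: scores at or below this are flagged

def readiness_report(scores):
    """Return (average score, criteria flagged as gaps, weakest first)."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    average = sum(scores[c] for c in CRITERIA) / len(CRITERIA)
    gaps = sorted(
        (c for c in CRITERIA if scores[c] <= GAP_THRESHOLD),
        key=lambda c: scores[c],
    )
    return average, gaps

# Hypothetical board self-ratings on the assumed 1-5 scale.
example_scores = {criterion: 3 for criterion in CRITERIA}
example_scores["Board AI Literacy"] = 2
example_scores["Data Governance"] = 1

average, gaps = readiness_report(example_scores)
print(f"average readiness: {average:.1f}")
print("priority gaps:", gaps)
```

Repeating the same assessment at regular intervals gives the board a trend line for the monitoring and reporting described earlier.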

Check out our AI for directors training